
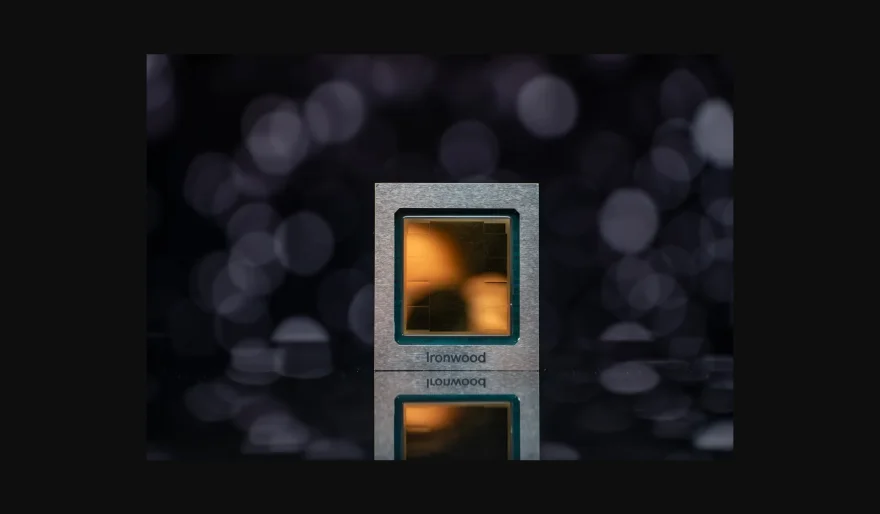

Google Unveils Ironwood: Its Most Powerful AI Accelerator Chip Yet

April 09, 2025 13:07

At Cloud Next, Google introduced Ironwood, its seventh-generation Tensor Processing Unit (TPU), designed for AI inference. Google calls Ironwood its most powerful and energy-efficient TPU to date. It will be offered in 256-chip and 9,216-chip clusters to support AI workloads of different sizes. The launch strengthens Google Cloud's position in the competitive AI accelerator market, where it competes with Nvidia, Amazon, and Microsoft.

At this week's Cloud Next conference, Google introduced Ironwood, the seventh generation of its Tensor Processing Unit (TPU). Ironwood is tailored for inference, the process of running trained AI models to produce outputs, and it is intended to boost AI model performance across Google Cloud services.

Unmatched Power and Efficiency

Amin Vahdat, a vice president at Google Cloud, described Ironwood as Google's most powerful and energy-efficient TPU to date. The chip is designed to run inference on AI models at scale. It will be available in two configurations:

- 256-chip cluster
- 9,216-chip cluster

These two sizes let customers match the cluster to the scale of their AI workloads.

Strategic Move in the AI Accelerator Race

Ironwood arrives as competition in the AI accelerator market intensifies. Nvidia has long led this sector, but Amazon and Microsoft are expanding their own in-house chips. Through AWS, Amazon offers its Trainium and Inferentia AI chips alongside its Arm-based Graviton CPUs. Through Azure, Microsoft offers its Maia 100 AI accelerator and its Arm-based Cobalt 100 CPU.

As the demand for AI-powered services skyrockets, tech giants are racing to develop more powerful and energy-efficient hardware solutions to run sophisticated AI models. Ironwood positions Google Cloud as a strong contender in this competitive landscape.

Ironwood’s Impact on Google Cloud Customers

Ironwood is due to reach Google Cloud customers later this year and will give them a new option for deploying AI models at scale. Because the chip is built for inference, its main use is serving trained models, including generative AI models. Google expects it to deliver faster processing and better power efficiency than its earlier TPUs, which would benefit businesses adopting generative AI and other machine learning applications.

Industries from healthcare to finance are increasing their use of AI. For businesses in these sectors, Ironwood could become an important tool for deploying AI effectively in the cloud.

The launch of Ironwood reinforces Google's standing in cloud-based AI infrastructure. It also marks a notable milestone in the evolution of AI accelerators.
